What happens when you take a ball of human brain cells, hook it up to a computer chip, and ask it to recognize human speech? You get a setup called Brainoware, and no, it's not satire. Researchers grew tiny brain organoids from stem cells, plugged them into multielectrode arrays, and discovered they can actually process spoken audio. Not only that: they learn.

This isn’t about mimicking a brain using silicon or pretending a neural net can replicate the insane complexity of biological tissue. This is the biological tissue. Actual brain matter, trained with electrical pulses and monitored like a lab rat at a rave. And it turns out, it’s good at stuff. Like really good. As we get closer to the Singularity, silver is going to be used even more.

How It Works: Training Human Brains to Speak Computer

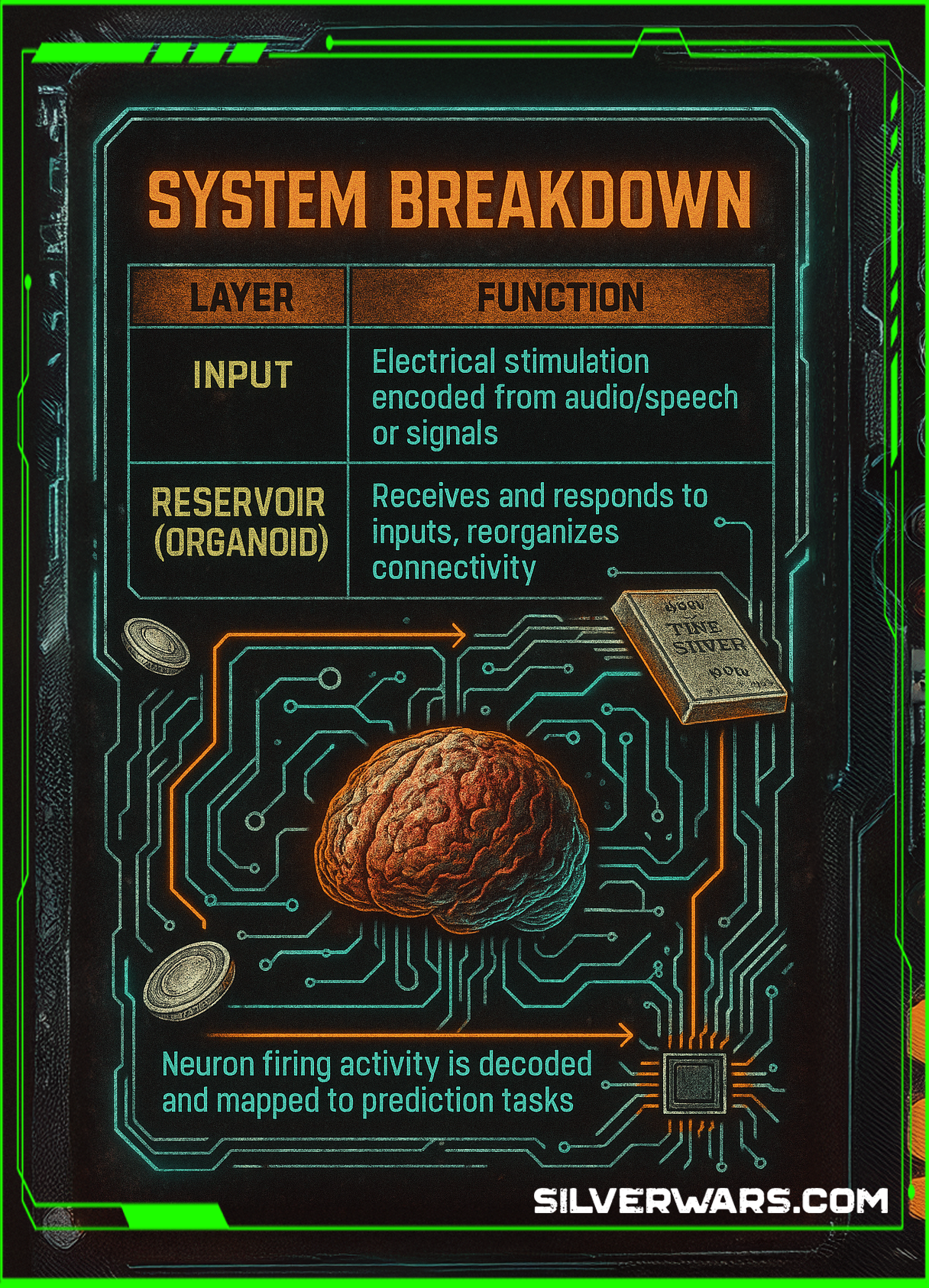

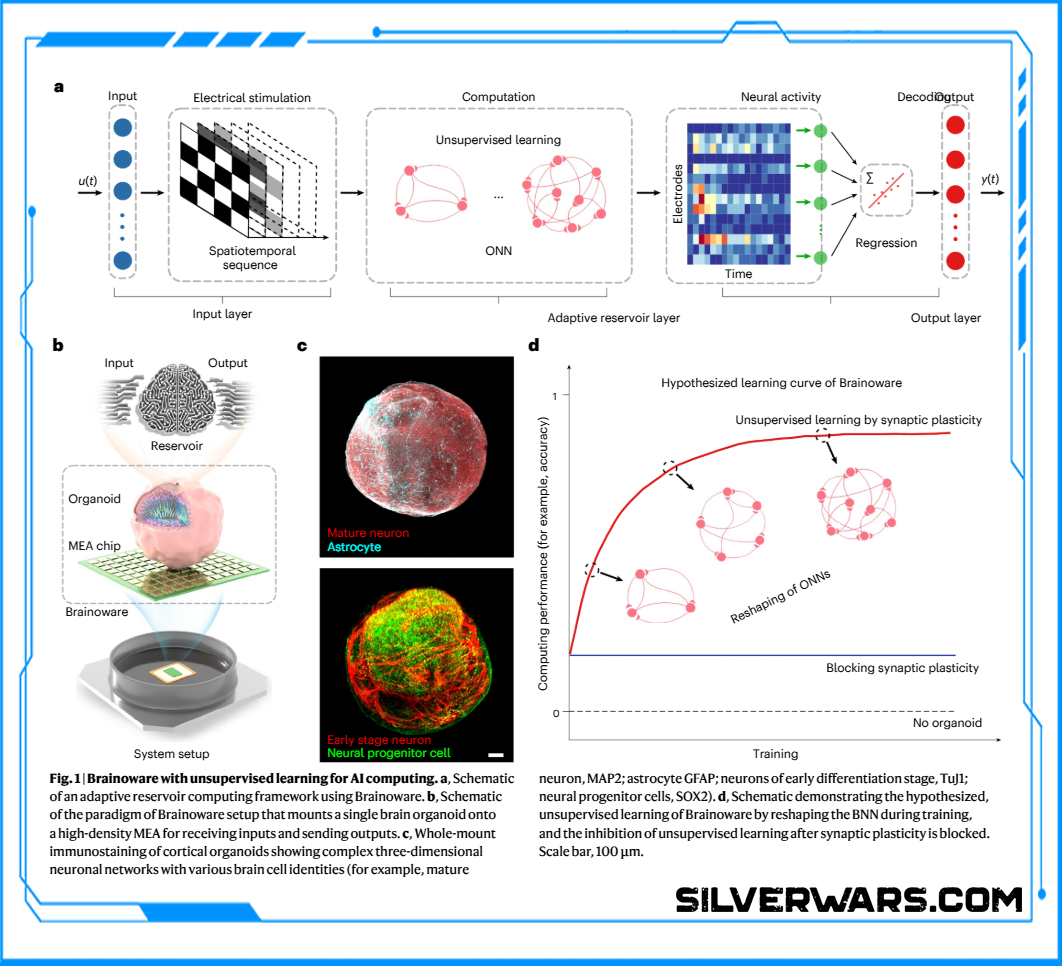

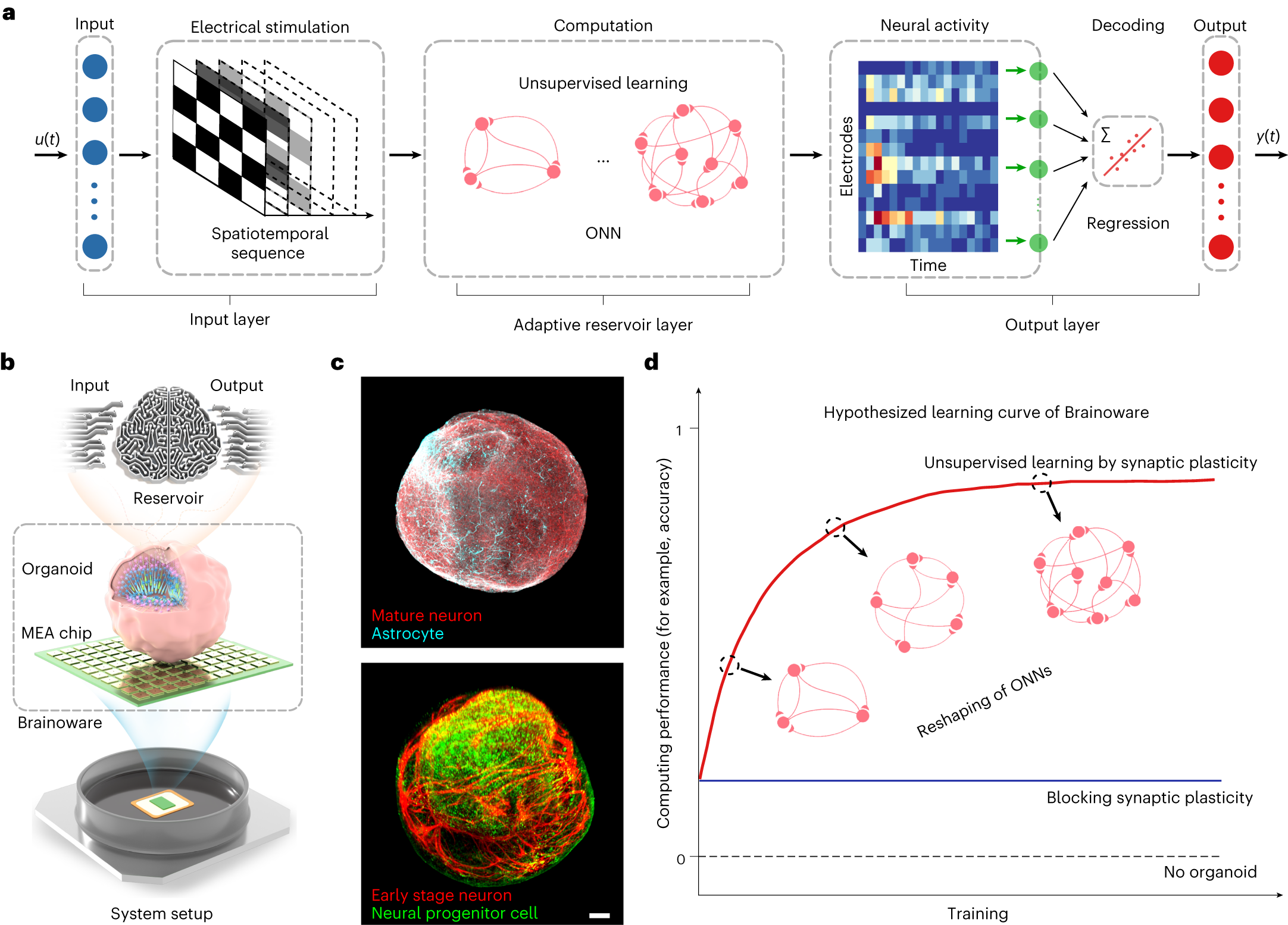

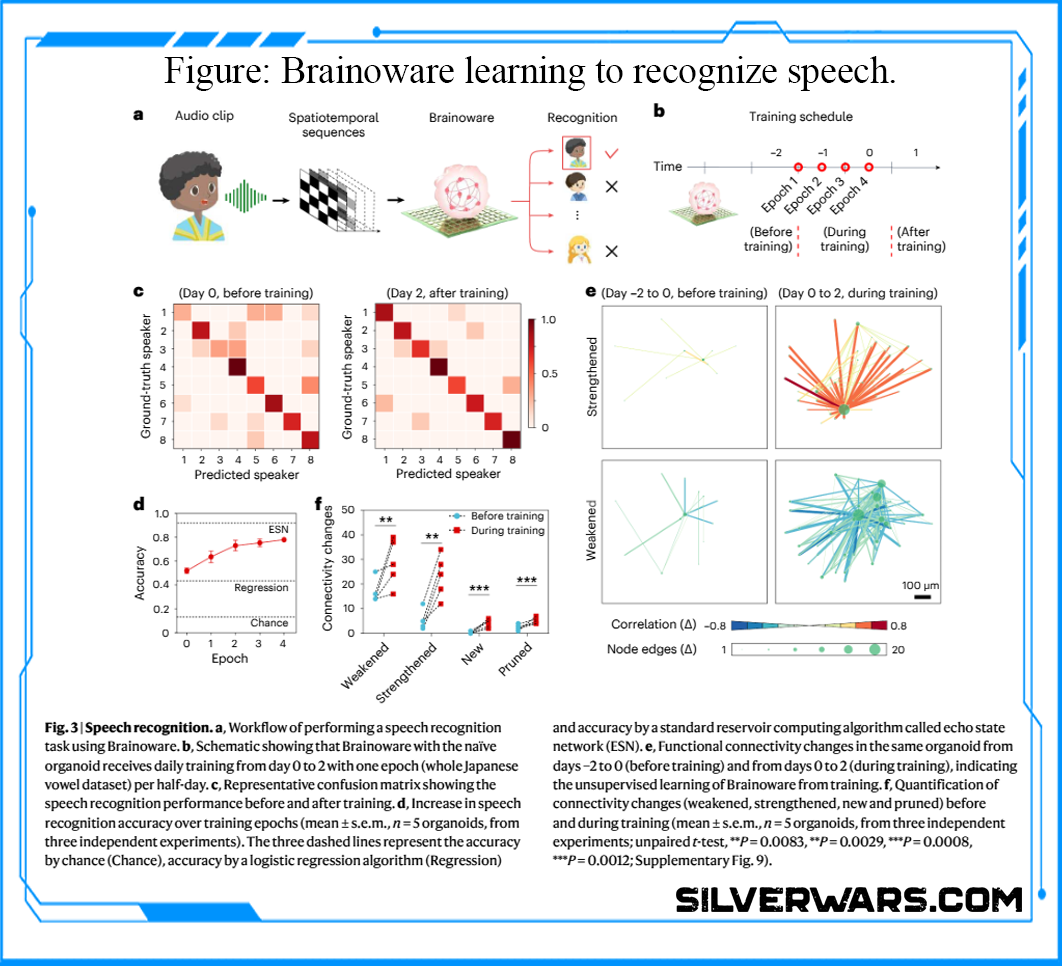

Let’s start with the layout. The researchers used brain organoids, basically tiny clusters of neurons grown from human pluripotent stem cells. These little guys were placed onto high-density multi-electrode arrays (MEAs), which can both send and record electrical signals. So what you get is a feedback loop: stimulate the brain blob with spatiotemporal electrical pulses, record its response, and adjust from there.

They call the full setup Brainoware. What it does is adapt, organically. The organoid learns without labels (unsupervised learning): instead of being brute-forced with labeled examples like a typical machine learning model, it reshapes its internal network in response to the stimulation.

System Breakdown

| Layer | Function |
|---|---|
| Input | Electrical stimulation encoded from speech audio or other input signals |
| Reservoir (Organoid) | Receives and responds to inputs, reorganizes connectivity |
| Output | Neuron firing activity is decoded and mapped to prediction tasks |
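The table describes a reservoir-computing layout: inputs drive a rich, nonlinear dynamical system (the reservoir), and only a simple output layer is fit to turn the reservoir's activity into answers. As a rough sketch of that split, the plain-Python example below swaps the organoid for a small random recurrent network (an echo state network). That is a simulated stand-in, not how Brainoware works internally. The function names, the 30-node size, the `gain` scaling, the ridge penalty, and the toy task (predicting the next sample of a sine wave) are all illustrative assumptions.

```python
import math
import random


def make_reservoir(n_nodes, gain=0.9, seed=0):
    """Random input and recurrent weights: a hypothetical stand-in for the organoid."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
    # Scaling by 1/sqrt(n) keeps activity fading rather than exploding
    # (a common heuristic, not an exact spectral-radius setting).
    w = [[gain * rng.uniform(-1.0, 1.0) / math.sqrt(n_nodes) for _ in range(n_nodes)]
         for _ in range(n_nodes)]
    return w_in, w


def run_reservoir(inputs, w_in, w):
    """Drive the reservoir with a 1-D input stream and record its state at each step."""
    n = len(w_in)
    x = [0.0] * n
    states = []
    for u in inputs:
        # New state depends on the current input and the previous state (fading memory).
        x = [math.tanh(w_in[i] * u + sum(w[i][j] * x[j] for j in range(n)))
             for i in range(n)]
        states.append(x + [1.0])  # constant 1.0 acts as a bias feature for the readout
    return states


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def train_readout(states, targets, ridge=1e-4):
    """Fit linear readout weights by ridge regression; the reservoir itself is never trained."""
    d = len(states[0])
    gram = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
             for j in range(d)] for i in range(d)]
    rhs = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(d)]
    return solve(gram, rhs)


def predict(states, weights):
    return [sum(w * s for w, s in zip(weights, state)) for state in states]


def demo(n_nodes=30, steps=300, washout=50):
    """Toy task: from the input at time t, predict the input at time t + 1."""
    signal = [math.sin(0.2 * t) for t in range(steps + 1)]
    w_in, w = make_reservoir(n_nodes)
    states = run_reservoir(signal[:-1], w_in, w)[washout:]  # drop the start-up transient
    targets = signal[1:][washout:]
    weights = train_readout(states, targets)
    preds = predict(states, weights)
    mse = sum((p - y) ** 2 for p, y in zip(preds, targets)) / len(targets)
    mean_y = sum(targets) / len(targets)
    var_y = sum((y - mean_y) ** 2 for y in targets) / len(targets)
    return mse, var_y
```

Only `train_readout` ever sees the targets; the reservoir's weights stay random and untouched, which is why no backpropagation is needed. In Brainoware the living network also rewires itself under stimulation, which this fixed toy reservoir makes no attempt to model.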

The organoid doesn’t just passively take signals. It changes: nonlinear dynamics, fading memory, synaptic plasticity, the whole biological bag. When plasticity was blocked using K252a (a compound that blocks synaptic plasticity), the organoids' ability to learn vanished, but their basic computing activity remained. In short: these blobs are smart only if you let them change.
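To picture what "blocking plasticity" means computationally, here is a deliberately tiny toy model, not a model of organoid biology. It uses Oja's rule, a textbook Hebbian learning rule for a single linear neuron, and a hypothetical `plastic` flag stands in for the K252a treatment. With plasticity on, the weights drift toward the dominant pattern in the input; with it off, the neuron still computes an output for every input, but its weights never change, so nothing is learned. The learning rate, input data, and starting weights are illustrative assumptions.

```python
import math
import random


def oja_neuron(samples, w, eta=0.005, plastic=True):
    """Run a linear neuron over input samples, optionally updating weights with Oja's rule.

    Returns the final weights and the neuron's outputs.
    plastic=False is a hypothetical analogue of blocking synaptic plasticity.
    """
    w = list(w)
    outputs = []
    for x in samples:
        y = sum(wi * xi for wi, xi in zip(w, x))  # the neuron computes either way
        outputs.append(y)
        if plastic:
            # Oja's rule: Hebbian growth (y * x) plus a decay term that keeps |w| near 1
            w = [wi + eta * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w, outputs


def correlated_inputs(n, noise=0.1, seed=0):
    """Two input channels that mostly carry the same signal."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        g = rng.gauss(0.0, 1.0)
        data.append((g, g + rng.gauss(0.0, noise)))
    return data


samples = correlated_inputs(5000)
w_learned, _ = oja_neuron(samples, [0.3, -0.1], plastic=True)
w_blocked, out_blocked = oja_neuron(samples, [0.3, -0.1], plastic=False)
learned_norm = math.sqrt(sum(v * v for v in w_learned))

print("plastic:", w_learned)   # both weights end up roughly equal, with |w| close to 1
print("blocked:", w_blocked)   # unchanged from the starting weights
```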


Speech Recognition—No Chips, Just Brains

The star application in this research? Speech recognition. The researchers used audio clips of eight male speakers pronouncing Japanese vowels, and the task was to identify which speaker was talking. The audio was encoded into patterns of electrical stimulation and delivered to the brain organoid.

Before training, the organoid sucked at this. Confusion matrices from day zero showed it mixing up the speakers roughly half the time. But after two days of exposure and training (just two epochs), the organoid began recognizing patterns.
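The article doesn't spell out how the audio was turned into stimulation, so the following is only a hypothetical illustration of one way to build a spatiotemporal pulse pattern from sound. It assumes each clip has already been reduced to a few feature values per time frame (for example, spectral coefficients), assigns one stimulation electrode per feature channel, and schedules a pulse on that electrode whenever the channel's normalized value crosses a threshold. `encode_frames`, the min-max normalization, and the 0.5 threshold are illustrative choices, not the study's protocol.

```python
def encode_frames(frames, threshold=0.5):
    """Turn per-frame audio features into a binary stimulation raster (hypothetical scheme).

    frames: list of time frames, each a list of feature values (one per channel).
    Returns raster[t][e] == 1 if electrode e should receive a pulse at frame t.
    Each channel is min-max normalized to [0, 1] across the clip before thresholding.
    """
    n_channels = len(frames[0])
    lows = [min(f[c] for f in frames) for c in range(n_channels)]
    highs = [max(f[c] for f in frames) for c in range(n_channels)]
    raster = []
    for f in frames:
        row = []
        for c in range(n_channels):
            span = highs[c] - lows[c]
            level = (f[c] - lows[c]) / span if span > 0 else 0.0  # flat channel: no pulses
            row.append(1 if level >= threshold else 0)
        raster.append(row)
    return raster


# Toy clip: 4 frames x 3 feature channels (made-up numbers, not real speech data)
clip = [[1, 5, -2],
        [9, 4, -1],
        [6, 1, 1],
        [2, 2, 2]]
raster = encode_frames(clip)
print(raster)  # [[0, 1, 0], [1, 1, 0], [1, 0, 1], [0, 0, 1]]
```

Each row is one time frame and each column one electrode, so the resulting pattern varies across both space (which electrodes fire) and time (when they fire).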

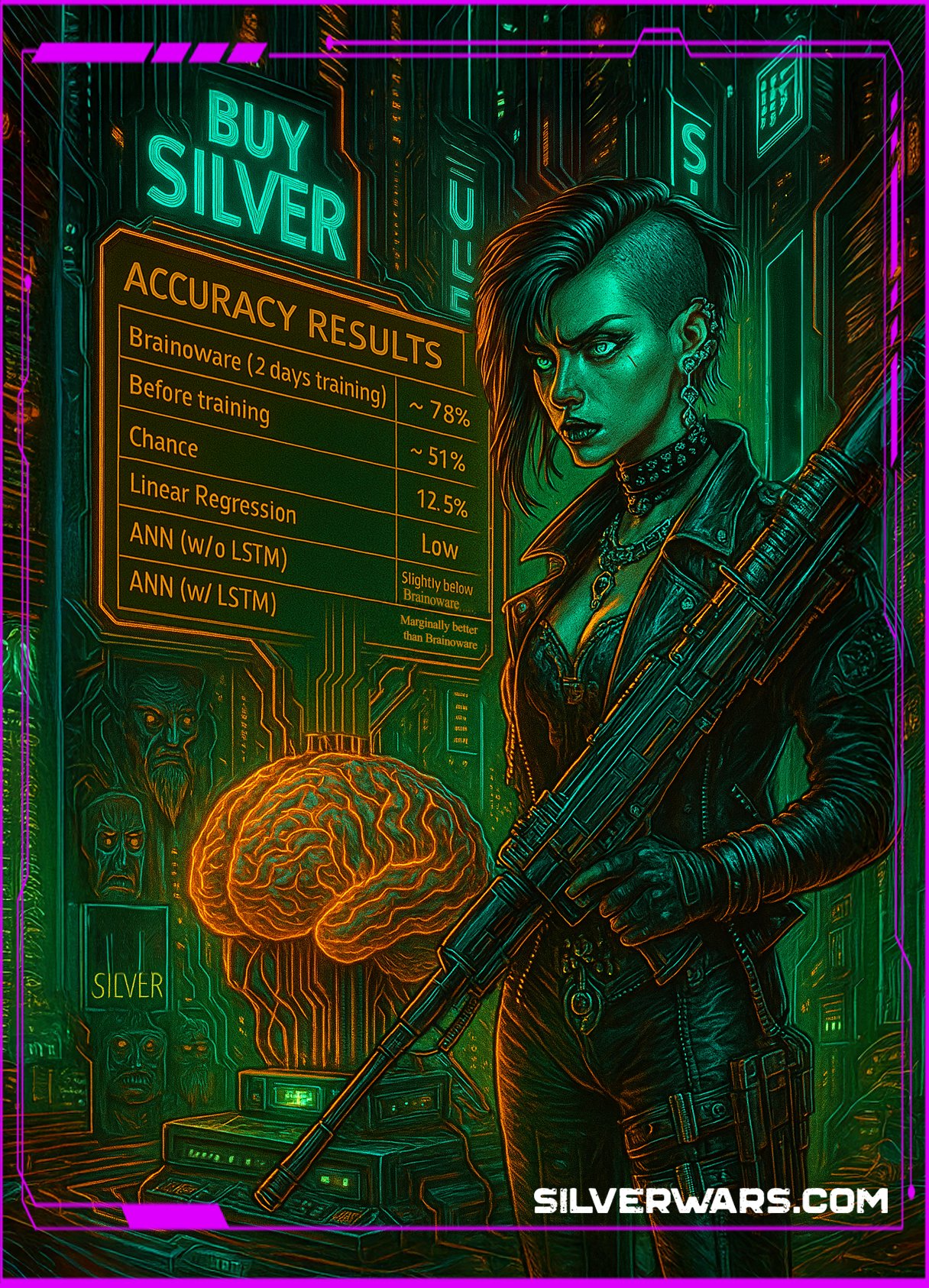

Accuracy Results

| Model | Accuracy |
|---|---|
| Brainoware (after 2 days of training) | ~78% |
| Brainoware (before training) | ~51% |
| Chance | 12.5% |
| Linear Regression | Low |
| ANN (w/o LSTM) | Slightly below Brainoware |
| ANN (w/ LSTM) | Marginally better than Brainoware |

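These results put Brainoware in the same league as the conventional neural networks it was benchmarked against on this task: not perfect, but clearly capable. And the organoid itself got there with no backpropagation, no labels, and a small training set; only the simple output layer that decodes its activity is fit to labeled examples.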
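The accuracy and chance figures in the table rest on simple arithmetic. A confusion matrix counts, for each true speaker (row), how often the system guessed each speaker (column), and accuracy is the share of all counts that land on the diagonal. Assuming the eight speakers are equally represented, a random guess is right one time in eight, which is where 12.5% comes from. The helper below is a generic sketch with made-up counts for three speakers, not the study's data.

```python
def accuracy(confusion):
    """Fraction of predictions on the diagonal (true speaker == predicted speaker)."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


def chance_level(n_classes):
    """Expected accuracy of uniform random guessing over balanced classes."""
    return 1.0 / n_classes


# Made-up 3-speaker example: rows = true speaker, columns = predicted speaker
toy = [[8, 1, 1],
       [2, 6, 2],
       [0, 3, 7]]
print(f"accuracy = {accuracy(toy):.2f}")                # accuracy = 0.70  (21 of 30)
print(f"chance (8 speakers) = {chance_level(8):.1%}")   # chance (8 speakers) = 12.5%
```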

Predicting Nonlinear Systems with a Brain Blob

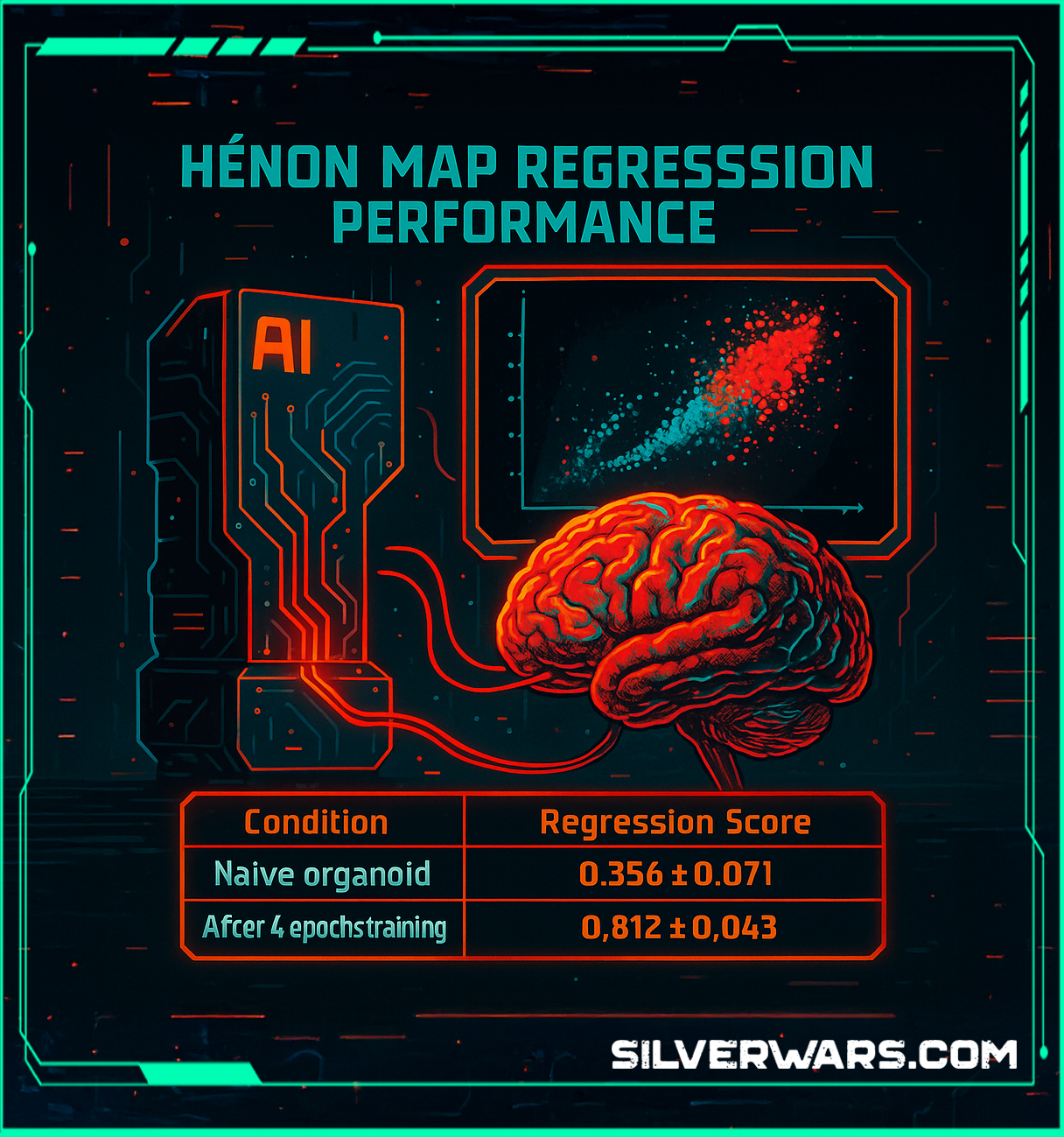

If speech recognition wasn’t enough, the researchers upped the ante by tasking the organoids with predicting a chaotic nonlinear system, the Hénon map. They converted the map's values into a stream of spatiotemporal pulse patterns and fed them into Brainoware. Over just four training epochs, performance shot up dramatically.
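For reference, the Hénon map is a two-variable recurrence: x(n+1) = 1 − a·x(n)² + y(n) and y(n+1) = b·x(n). The sketch below generates a trajectory and turns it into one-step-ahead (input, target) pairs, which is the usual way a reservoir is asked to "predict" a system like this. The classic parameter values a = 1.4 and b = 0.3, the starting point (0, 0), and the function names are assumptions; the study's exact settings may differ.

```python
def henon(steps, a=1.4, b=0.3, x0=0.0, y0=0.0):
    """Iterate the Henon map: x' = 1 - a*x**2 + y, y' = b*x."""
    xs, ys = [x0], [y0]
    x, y = x0, y0
    for _ in range(steps):
        # Tuple assignment: both right-hand sides use the previous x and y.
        x, y = 1.0 - a * x * x + y, b * x
        xs.append(x)
        ys.append(y)
    return xs, ys


def one_step_pairs(series):
    """Pair each value with the next one: (input at n, target at n + 1)."""
    return list(zip(series[:-1], series[1:]))


xs, ys = henon(1000)
pairs = one_step_pairs(xs)
print(xs[:4])  # approximately [0.0, 1.0, -0.4, 1.076]
```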

Hénon Map Regression Performance

| Condition | Regression Score |
|---|---|
| Naïve organoid | 0.356 ± 0.071 |
| After 4 epochs of training | 0.812 ± 0.043 |

When the same test was run on organoids treated with the synaptic plasticity blocker, performance stayed flat. They couldn’t learn. Plasticity matters, a lot.
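The article doesn't define "regression score". If it is the coefficient of determination, R² (a common choice for regression tasks, but an assumption here), it compares prediction error to the spread of the target values: 1.0 is a perfect fit and 0.0 is no better than always guessing the mean. Under that reading, going from 0.356 to 0.812 would mean the share of unexplained variance fell from roughly 64% to roughly 19%. The helper below is a generic sketch with made-up values.

```python
def r_squared(targets, predictions):
    """Coefficient of determination: 1 - (residual sum of squares / total sum of squares)."""
    mean_t = sum(targets) / len(targets)
    ss_res = sum((t - p) ** 2 for t, p in zip(targets, predictions))
    ss_tot = sum((t - mean_t) ** 2 for t in targets)
    return 1.0 - ss_res / ss_tot


targets = [1.0, -0.4, 1.076, -0.7]
mean_t = sum(targets) / len(targets)
print(r_squared(targets, targets))                  # perfect fit: 1.0
print(r_squared(targets, [mean_t] * len(targets)))  # mean-only guess: 0.0
```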

Functional Connectivity Isn't Just For Show

What made all this possible was not brute computation but dynamic functional connectivity. The networks inside these organoids changed their wiring on the fly, strengthening or weakening connections as needed. Visualization of the network edges before and after training showed:

- Strengthened pathways during training

- New edges formed

- Old paths pruned
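One simple way to estimate functional connectivity from electrode recordings is to ask how similarly pairs of electrodes fire over time. The sketch below uses Pearson correlation of binned spike counts with a fixed threshold to build an edge list, then sorts the before/after differences into the three categories listed above. The correlation measure, the 0.5 threshold, the toy spike counts, and all function names are illustrative assumptions, not the study's analysis pipeline.

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb) if sa > 0 and sb > 0 else 0.0


def functional_edges(counts, threshold=0.5):
    """counts[e] = binned spike counts for electrode e; keep pairs with |r| >= threshold."""
    edges = {}
    for i, j in combinations(range(len(counts)), 2):
        r = pearson(counts[i], counts[j])
        if abs(r) >= threshold:
            edges[(i, j)] = r
    return edges


def compare_networks(before, after):
    """Classify edge changes as strengthened, new, or pruned."""
    shared = before.keys() & after.keys()
    strengthened = sorted(e for e in shared if abs(after[e]) > abs(before[e]))
    new = sorted(after.keys() - before.keys())
    pruned = sorted(before.keys() - after.keys())
    return strengthened, new, pruned


a = [1, 0, 1, 0, 1, 0]
b = [1, 1, 0, 0, 1, 1]                  # uncorrelated with a (r = 0)
before = functional_edges([a, a, b])    # electrodes 0 and 1 fire together
after = functional_edges([a, b, a])     # now electrodes 0 and 2 fire together
print(compare_networks(before, after))  # ([], [(0, 2)], [(0, 1)])
```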

And all this happened inside the organoid without any external supervision. The implication here is wild: you can train living brain matter for computing tasks with electrical stimulation, plus a simple decoder to read out the answer.

It's still a proof-of-concept demonstration, but it could be a glimpse of where computing is headed.

Silver’s Medical Usage Keeps Growing

So where does silver fit into all of this? MEA chips, the core tech used to talk to brain organoids, rely on highly conductive materials. Silver is favored for its biocompatibility, antimicrobial properties, and excellent electrical conductivity. As brain-organoid interfaces become more sophisticated (and more commercial), silver demand in neurotech is likely to rise.

Silver already plays a major role in:

- Wearable bioelectronic patches

- Wound dressings

- Catheters and tubing

- Surgical instruments

- Medicines and ointments

Now we can add: interfaces for organoid-based biological computing. We're talking lab-grown gray matter being wired up with silver-conductive systems to do jobs once reserved for silicon and carbon.

The Silver Brain Wars Have Just Begun...

Let’s be honest about what this is: not just a toy project or a tech demo, but a biological computing substrate showing real learning, memory, and pattern recognition capacity. It’s adapting in real time, using spiking neurons in a wet interface, not binary digits in a dry wafer. And it points toward a power efficiency that could embarrass even the best modern AI hardware.

So while everyone’s out here LARPing about AGI, these researchers are literally teaching blobs of neurons how to understand speech. And that blob is plugged into a silver-electrode interface that may redefine both neurobiology and computing.

The properties of silver are propelling humanity toward what most would have considered science fiction twenty years ago, for good or ill.